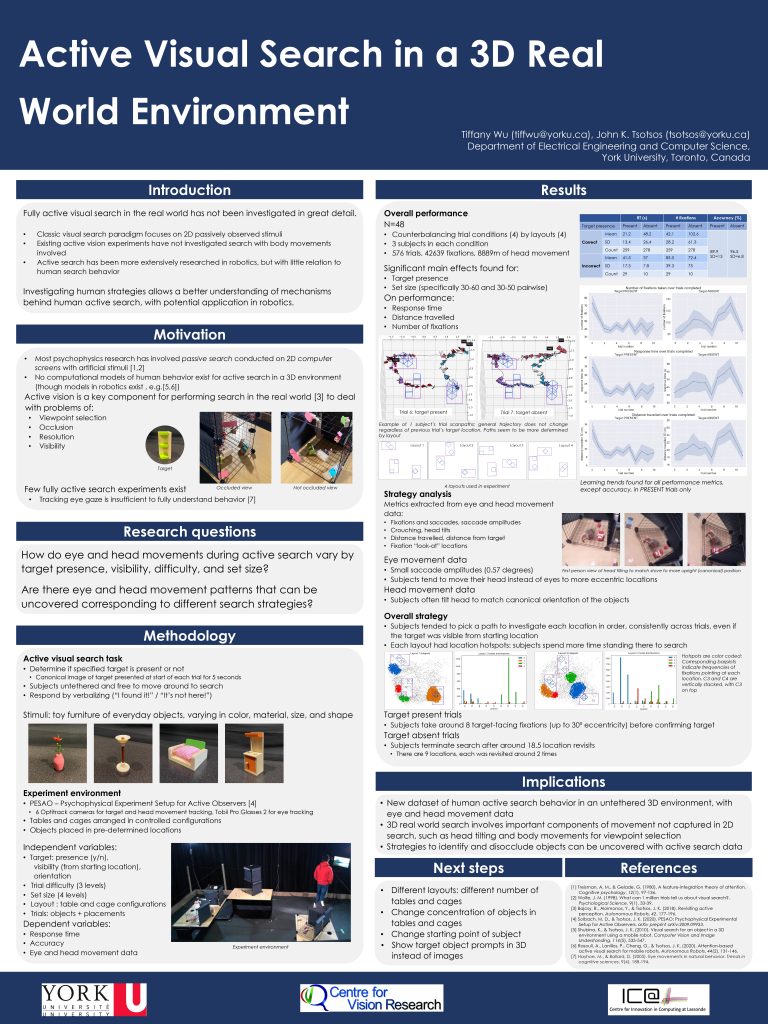

Tiffany Wu presents “Active Visual Search in a 3D Real World Environment” at RAW 2023

Venue: RAW 2023, Rovereto, Italy

Paper: Active Visual Search in a 3D Real World Environment

Abstract:

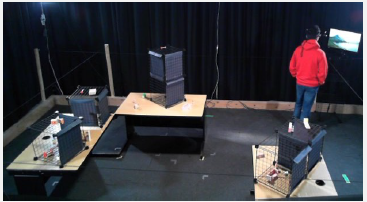

Active visual search in the real world has not been investigated in great detail. Whilst the visual search paradigm has been widely used since its popularization, most studies have not moved beyond the 2D, passive version of the task, where immobile subjects search through artificial stimuli presented on a screen. However, how much these studies’ results can be extrapolated to the real world is unclear, since targets may not be immediately visible, forcing observers to select viewpoints in order to search around occluding objects. To investigate whether the classic results hold true in the real world, an active visual search task was conducted in the 3D PESAO setup environment (Solbach & Tsotsos, 2020), with a 3 × 4 m search space furnished with tables and wire cages. Observers moved freely, untethered, and their eye and head movements, reaction time, and accuracy were precisely synchronized and measured over 12 trials each. The experimental stimuli were miniature everyday objects, scattered semi-randomly on the tables and cages, and observers had to navigate around them to conduct the search. Trial difficulty was manipulated by changing the number of shared features between the target object and distractors, and set size varied between 30 and 60 objects. Half the present targets were occluded from the starting viewpoint. Results indicate that, as in 2D search tasks, target-absent trials take longer than target-present trials and require more fixations and head travel. Surprisingly, target occlusion has no significant effect on reaction time, and only marginal effects on the number of fixations and distance traveled. Objects with non-upright orientations induced motions such as head tilting and crouching before observers declared targets present or absent. Our results provide a novel view of how humans perform unconstrained visual search in a 3D world and help lay foundations for understanding our general ability for visuospatial problem solving.

Poster: