Motion

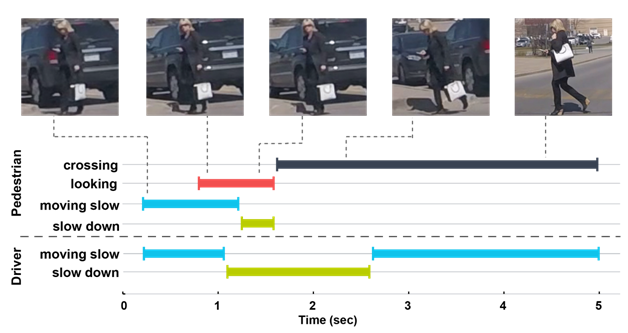

Pedestrian behavior understanding

The goal of this project is to observe and understand pedestrian actions at the time of crossing, and to identify the factors that influence how pedestrians make crossing decisions. We intend to incorporate these factors into predictive models in order to improve prediction of pedestrian behavior.

Key Results

We found that joint attention, i.e. when pedestrians make eye contact with drivers, is a strong indicator of crossing intention. We identified numerous factors, such as demographics, street structure, and driver behavior, that affect pedestrian crossing behavior. We also explored how pedestrians communicate their intention and what their communication signals mean. Our initial experiments suggest that including such information in practical applications can improve prediction of pedestrian behavior.

Publications

A. Rasouli and J. K. Tsotsos, “Autonomous vehicles that interact with pedestrians: A survey of theory and practice,” IEEE Transactions on Intelligent Transportation Systems, 2019.

A. Rasouli, I. Kotseruba, and J. K. Tsotsos, “Agreeing to cross: How drivers and pedestrians communicate,” In Proc. Intelligent Vehicles Symposium (IV), 2017, pp. 264–269.

A. Rasouli, I. Kotseruba, and J. K. Tsotsos, “Are they going to cross? A benchmark dataset and baseline for pedestrian crosswalk behavior,” In Proc. International Conference on Computer Vision (ICCV) Workshop, 2017, pp. 206–213.

A. Rasouli, I. Kotseruba, and J. K. Tsotsos, “Towards Social Autonomous Vehicles: Understanding Pedestrian-Driver Interactions,” In Proc. International Conference on Intelligent Transportation Systems (ITSC), pp. 729-734, 2018.

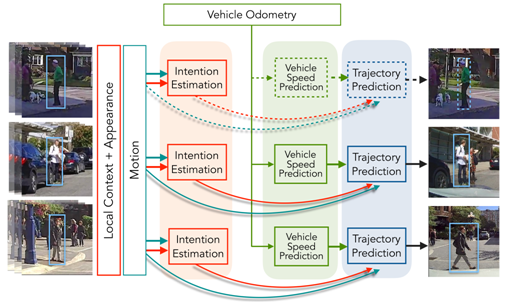

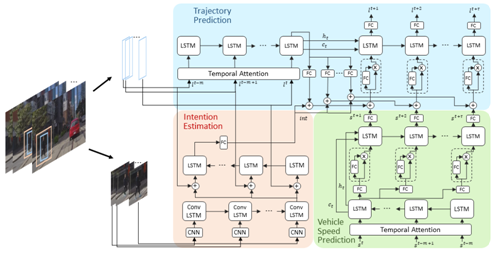

Pedestrian intention estimation

The objective of this project is to develop methods to predict the underlying intention of pedestrians on the road. Understanding intention helps distinguish between pedestrians who will potentially cross the street and those who will not, e.g. those waiting for a bus. To achieve this objective, we want to establish a baseline by asking human participants to observe pedestrians under various conditions and tell us what the intentions of the pedestrians were. We want to use this information to train an intention estimation model.

Key Results

Humans are very good at estimating the intention of pedestrians. Overall, there is a high degree of agreement among human observers regarding the intentions of people on the road. From a practical perspective, we found that including a mechanism to estimate pedestrian intention in a trajectory prediction framework can improve the results.

Publications

A. Rasouli, I. Kotseruba, T. Kunic, and J. Tsotsos, “PIE: A Large-Scale Dataset and Models for Pedestrian Intention Estimation and Trajectory Prediction”, ICCV 2019

A. Rasouli, I. Kotseruba, and J. K. Tsotsos, “Pedestrian Action Anticipation using Contextual Feature Fusion in Stacked RNNs”, BMVC 2019.

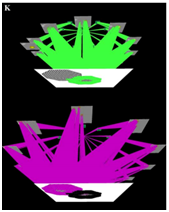

Selective Tuning of Convolutional Network for Affine Motion Pattern Classification and Localization

Tsotsos et al. (2004) presented the first motion-processing model to include a multi-level decomposition with local spatial derivatives of velocity, and demonstrated localization of motion patterns using selective tuning. Later, Vintila and Tsotsos (2007) presented motion detection and estimation for attending to visual motion using the orientation tensor formalism, with the velocity field extracted by a model with 9 quadrature filters. Here we aim to develop a learning framework for these motions with the same goals: classify affine motion patterns using a convolutional network, localize the motion patterns using the selective tuning algorithm, and evaluate the performance of different network architectures on these tasks.
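To make the terms "affine motion pattern" and "local spatial derivatives of velocity" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the model or code used in this project: an affine velocity field is taken to be v(x, y) = A·(x, y) + t, and its first-order structure is summarized by the standard divergence, curl and shear combinations of the entries of A. The function names (`affine_flow`, `first_order_components`, `estimate_gradient`) and the grid size are hypothetical choices made for this example.

```python
from typing import Dict, List, Tuple

Vec2 = Tuple[float, float]
Matrix2 = Tuple[Vec2, Vec2]


def affine_flow(A: Matrix2, t: Vec2, size: int = 5) -> List[List[Vec2]]:
    """Sample v(x, y) = A @ (x, y) + t on a size x size grid centred on the origin.

    field[row][col] holds the velocity (u, v) at x = col - size // 2,
    y = row - size // 2. This layout is an assumption of the sketch.
    """
    half = size // 2
    field = []
    for row in range(size):
        y = row - half
        line = []
        for col in range(size):
            x = col - half
            u = A[0][0] * x + A[0][1] * y + t[0]
            v = A[1][0] * x + A[1][1] * y + t[1]
            line.append((u, v))
        field.append(line)
    return field


def first_order_components(A: Matrix2) -> Dict[str, float]:
    """Split the velocity gradient A = [[du/dx, du/dy], [dv/dx, dv/dy]]
    into divergence (expansion/contraction), curl (rotation) and two shears."""
    (ux, uy), (vx, vy) = A
    return {
        "divergence": ux + vy,
        "curl": vx - uy,
        "shear_1": ux - vy,
        "shear_2": uy + vx,
    }


def estimate_gradient(field: List[List[Vec2]]) -> Matrix2:
    """Recover the velocity gradient at the grid centre by central differences.

    For a purely affine field this is exact and independent of the translation t.
    """
    c = len(field) // 2
    ux = (field[c][c + 1][0] - field[c][c - 1][0]) / 2.0
    uy = (field[c + 1][c][0] - field[c - 1][c][0]) / 2.0
    vx = (field[c][c + 1][1] - field[c][c - 1][1]) / 2.0
    vy = (field[c + 1][c][1] - field[c - 1][c][1]) / 2.0
    return ((ux, uy), (vx, vy))


# Two example patterns: counter-clockwise rotation and uniform expansion.
ROTATION: Matrix2 = ((0.0, -1.0), (1.0, 0.0))
EXPANSION: Matrix2 = ((1.0, 0.0), (0.0, 1.0))
```

In this sketch, a rotation pattern has non-zero curl and zero divergence, an expansion pattern has the opposite, and adding a translation changes the field everywhere without changing its spatial derivatives. A classifier for affine motion patterns would need to separate such cases from image data, which this toy example does not attempt.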

Key Results

Publications

Vintila, F., Tsotsos, J.K., Motion Estimation using a General Purpose Neural Network Simulator for Visual Attention, IEEE Workshop: Applications of Computer Vision, Austin, Texas, Feb. 21-22, 2007.

Tsotsos, J.K., Liu, Y., Martinez-Trujillo, J., Pomplun, M., Simine, E., Zhou, K., Attending to Visual Motion, Computer Vision and Image Understanding 100(1-2), p3-40, Oct. 2005.

Neurophysiology of Motion Processing

Ongoing experimental research on human visual motion perception is conducted with our collaborators Julio Martinez-Trujillo of the University of Western Ontario and Mazyar Fallah of York University, together with researchers at the Hospital for Sick Children, Toronto; the Otto-von-Guericke University of Magdeburg, Germany; Georg-August-Universität, Göttingen, Germany; and the University of Iowa. They use both single-cell recording and brain imaging techniques to uncover details of the human and non-human primate motion processing architecture and to test predictions of the Selective Tuning model.

Publications

Yoo, S.A., Martinez-Trujillo, J., Treue, S., Tsotsos, J.K., Fallah, M., Feature-based surround suppression in the motion direction domain (submitted)

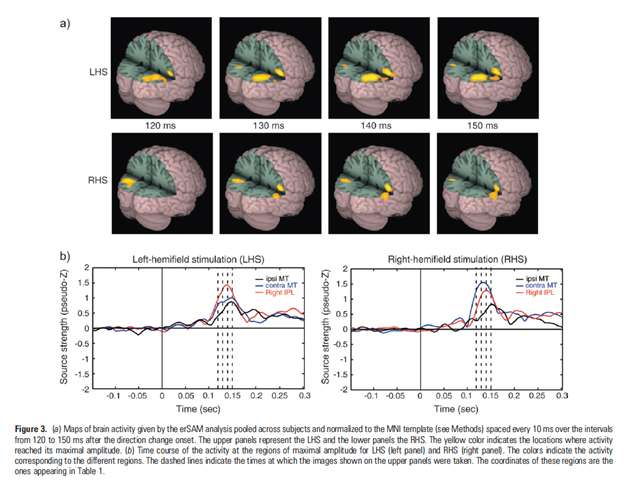

Martinez-Trujillo, J.C., Cheyne, D., Gaetz, W., Simine, E., Tsotsos, J.K., Activation of area MT/V5 and the right inferior parietal cortex during the discrimination of transient direction changes in translational motion, Cerebral Cortex, 17(7), p1733-9, 2007.

Martinez-Trujillo, J.C., Tsotsos, J.K., Simine, E., Pomplun, M., Wildes, R., Treue, S., Heinze, H.-J., Hopf, J.-M., Selectivity for Speed Gradients in Human Area MT/V5, NeuroReport 16(5), p435-438, Apr 4, 2005