Cognitive Programs

STAR-RT

A real-time implementation of the STAR architecture that plays two web browser games, Canabalt and Robot Unicorn Attack, using only visual input. The goal was to implement a set of attentional mechanisms and a visual processing pipeline in order to test the biologically inspired concept of Cognitive Programs on a complex, dynamic visual task.

Key results: our algorithm played on par with expert human players and achieved a #18 ranking in Canabalt; the first proof of concept of Cognitive Programs on a realistic task; a real-time implementation of multiple attention mechanisms.

Publications

I. Kotseruba, J.K. Tsotsos, “STAR-RT: Visual attention for real-time video game playing.” arXiv preprint arXiv:1711.09464 (2017).

J.K. Tsotsos, W. Kruijne, “Cognitive programs: software for attention’s executive.” Frontiers in Psychology 5 (2014): 1260.

Learning by Composition and Exploration

Modern machine learning is largely predicated on several tacit beliefs. First, it rests on Turing’s 1950 assertion: “Instead of trying to produce a programme to simulate the adult mind, why not rather try to produce one which simulates the child’s? If this were then subjected to an appropriate course of education one would obtain the adult brain. Presumably the child brain is something like a note-book as one buys it from the stationers. Rather little mechanism, and lots of blank sheets. (Mechanism and writing are from our point of view almost synonymous.) Our hope is that there is so little mechanism in the child-brain that something like it can be easily programmed. The amount of work in the education we can assume, as a first approximation, to be much the same as for the human child.” In other words, it is assumed that humans learn by feeding their brains enough data, which, for vision, means visual stimuli.

Second, the ‘feeding’ is done passively; that is, the system is a passive observer, much like a barnacle on a rock that simply waits for nourishment to come to it.

Third, the nature of learning is constant throughout the learning process; it does not change its mechanism or form.

These beliefs are all false when compared to human learning.

This project seeks to develop a new learning paradigm that revises these three beliefs so that they are consistent with what is known about human perceptual and cognitive learning. The new paradigm, which we term Learning by Composition and Exploration (LCE), will be applied to the learning of Cognitive Programs, the updated version of Ullman’s classic Visual Routines (see the Cognitive Programs project page).

Basic tenets of this paradigm include:

• An intelligent system acquires data as it is required, while always being vigilant to its environment, and reasons about its role in fulfilling tasks.

• It features closed-loop control, in which the system is an active agent that interacts with its environment.

• The system is attentive: it dynamically tunes itself for the task and context at hand. It learns from these actions to improve its performance on the next tasks (in this sense there is some commonality with the basics of reinforcement learning).

• Active inductive inference seems a necessary mechanism to drive active exploration; it requires a world model from which to draw inferences and direct their testing.
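The tenet of active inductive inference guided by a world model can be illustrated with a toy closed loop. The sketch below is our own hypothetical example, not the LCE method itself: the "world model" is simply a list of candidate hypotheses about a hidden threshold, and instead of passively waiting for data, the agent requests only the observation that best discriminates among the surviving hypotheses, then revises its model from the outcome. All names (`make_environment`, `choose_query`, `explore`) are illustrative assumptions.

```python
from typing import Callable, List, Tuple

# Hypothetical toy world: the environment hides a threshold t in 0..99,
# and probing it with q reveals only whether q >= t.
Hypothesis = int  # a candidate value for the hidden threshold


def make_environment(hidden_threshold: int) -> Callable[[int], bool]:
    """Return a probe function; the agent never sees the threshold directly."""
    return lambda q: q >= hidden_threshold


def choose_query(hypotheses: List[Hypothesis]) -> int:
    """Inference directs the test: pick the probe that splits the hypotheses most evenly."""
    best_q, best_balance = hypotheses[0], -1
    for q in hypotheses:
        yes = sum(1 for h in hypotheses if q >= h)  # hypotheses predicting True
        balance = min(yes, len(hypotheses) - yes)
        if balance > best_balance:
            best_q, best_balance = q, balance
    return best_q


def explore(env: Callable[[int], bool], hypotheses: List[Hypothesis]) -> Tuple[int, int]:
    """Closed loop: infer, act (probe), observe, revise the world model."""
    steps = 0
    while len(hypotheses) > 1:
        q = choose_query(hypotheses)  # decide which data to acquire next
        outcome = env(q)              # act on the environment and observe
        # Keep only the hypotheses consistent with what was observed.
        hypotheses = [h for h in hypotheses if (q >= h) == outcome]
        steps += 1
    return hypotheses[0], steps
```

For example, `explore(make_environment(37), list(range(100)))` returns the threshold 37. Because each probe is chosen to split the surviving hypotheses roughly in half, the loop needs at most seven probes to recover any threshold in 0..99.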

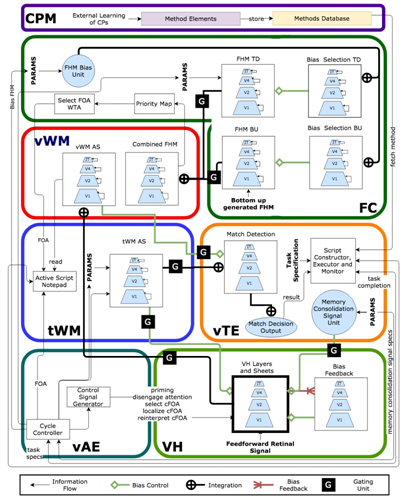

STAR – The Selective Tuning Attentive Reference Cognitive Architecture

What computational tasks must an executive controller for visual attention solve? This question is posed in the context of the Selective Tuning model of attention. The required computations go beyond top-down bias signals or region-of-interest determinations and must deal with overt and covert fixations, process timing and synchronization, information routing, memory, matching control to task, spatial localization, priming, and the coordination of bottom-up with top-down information. During task execution, results must be monitored to confirm that they match expectations. These are the kinds of elements common to the control of any complex machine or system.

We seek a mechanistic integration of the above, in other words, algorithms that accomplish control. Such algorithms operate on representations, transforming a representation of one kind into another, which then forms the input to yet another algorithm. Cognitive Programs (CPs) are hypothesized to capture exactly such representational transformations via stepwise sequences of operations. CPs, an updated and modernized offspring of Ullman’s Visual Routines, impose an algorithmic structure on the set of attentional functions and help shape the attentional modulation of the visual system so that it performs at its best. This requires that we consider the visual system as a dynamic, yet general-purpose processor tuned to the task and input of the moment. This differs dramatically from the almost universal cognitive and computational views, which regard vision as a passively observing module to which simple questions about percepts can be posed, regardless of task.

Unlike Visual Routines, CPs explicitly involve the critical elements of a Visual Task Executive, a Visual Attention Executive, and Visual Working Memory. Cognitive Programs provide the software that directs the actions of the Selective Tuning model of visual attention. The STAR architecture ties all of these elements together.
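The idea of a CP as a stepwise sequence of operations, each transforming one representation into the input of the next while results are monitored against expectations, can be sketched as follows. This is a minimal illustration under our own assumptions: the `Step` and `CognitiveProgram` classes, the `working_memory` dictionary standing in for Visual Working Memory, and the toy saliency and selection steps are all hypothetical and are not taken from STAR, STAR-RT, or the Cognitive Program Compiler. The task and attention executives are not modeled.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Step:
    """One operation of a hypothetical Cognitive Program."""
    name: str
    run: Callable[[Any], Any]  # maps an input representation to an output representation
    expect: Callable[[Any], bool] = lambda _: True  # monitor: does the output look as expected?


@dataclass
class CognitiveProgram:
    steps: List[Step]
    # Hypothetical stand-in for Visual Working Memory: keeps every intermediate result.
    working_memory: Dict[str, Any] = field(default_factory=dict)

    def execute(self, representation: Any) -> Any:
        for step in self.steps:
            representation = step.run(representation)  # output feeds the next step
            self.working_memory[step.name] = representation
            if not step.expect(representation):  # monitor results during execution
                raise RuntimeError(f"step {step.name!r} produced an unexpected result")
        return representation


def to_saliency(image: List[List[int]]) -> List[tuple]:
    """Toy 'saliency' map: pair each pixel intensity with its (row, col) location."""
    return [(v, (r, c)) for r, row in enumerate(image) for c, v in enumerate(row)]


def select_peak(saliency: List[tuple]) -> tuple:
    """Attend to the most salient location and return its (row, col)."""
    return max(saliency)[1]


image = [[0, 1, 0], [0, 9, 2], [3, 0, 0]]
program = CognitiveProgram([
    Step("saliency", to_saliency, expect=lambda s: len(s) == 9),
    Step("select", select_peak),
])
print(program.execute(image))  # (1, 1)
```

Keeping each intermediate representation in `working_memory` lets a later step, or a monitoring process, inspect what earlier steps produced, while the `expect` checks play the role of monitoring results during task execution.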

Publications

Abid, O., Cognitive Programs Memory – A framework for integrating executive control in STAR, M.Sc. Thesis, Dept. of Electrical Engineering and Computer Science, York University, December 12, 2018.

Rosenfeld, A., Tsotsos, J.K., Bridging Cognitive Programs and Machine Learning, arXiv Preprint arXiv:1802.06091, February 2018.

Kunic, T., Cognitive Program Compiler, M.Sc. Thesis, Dept. of Electrical Engineering and Computer Science, York University, April 11, 2017.

Tsotsos, J.K., Kruijne, W., Cognitive programs: Software for attention’s executive, Frontiers in Psychology: Cognition 5:1260, 2014. doi: 10.3389/fpsyg.2014.01260 (Special Issue on Theories of Visual Attention – linking cognition, neuropsychology, and neurophysiology, edited by S. Kyllingsbæk, C. Bundesen, S. Vangkilde).

Tsotsos, J.K., Cognitive programs: towards an executive controller for visual attention, F1000Posters 4:355, 2013 (poster).