Visual Search

Active Visual Search

The active projects are presented below. The lab has a rich history on this topic, represented by the following publications:

Shubina, K., Tsotsos, J.K. Visual Search for an Object in a 3D Environment using a Mobile Robot, Computer Vision and Image Understanding, 114, p535-547, 2010.

Tsotsos, J.K., Shubina, K., Attention and Visual Search: Active Robotic Vision Systems, International Conference on Computer Vision Systems, Bielefeld, Germany, March 21-24, 2007.

Ye, Y., Tsotsos, J.K., A Complexity Level Analysis of the Sensor Planning Task for Object Search, Computational Intelligence, 17(4), p605-620, Nov. 2001.

Ye, Y., Tsotsos, J.K., Sensor Planning for Object Search, Computer Vision and Image Understanding 73(2), p145-168, 1999.

Ye, Y., Tsotsos, J.K., Sensor Planning in 3D Object Search, Int. Symposium on Intelligent Robotic Systems, Lisbon, July 1996.

Ye, Y., Tsotsos, J.K., 3D Sensor Planning: Its Formulation and Complexity, International Symposium on Artificial Intelligence and Mathematics, January 1996.

Ye, Y., Tsotsos, J.K., Where to Look Next in 3D Object Search, IEEE International Symposium on Computer Vision, Coral Gables, Florida, November 1995, p539-544.

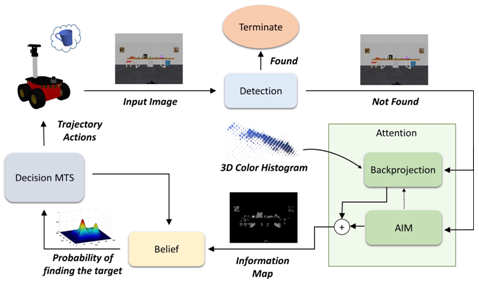

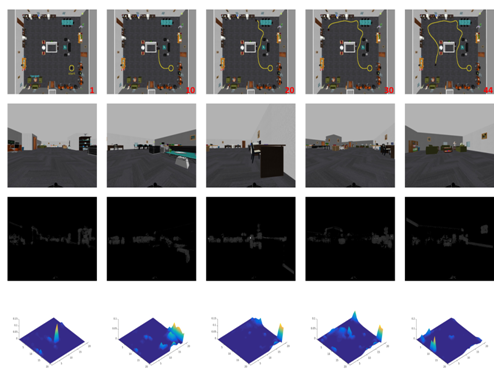

Autonomous visual search

The objective of this project is to examine how visual cues in an unknown environment can be extracted and used to improve the efficiency of visual search conducted by an autonomous mobile robot. We want to investigate different attentional mechanisms, such as bottom-up and top-down visual saliency, and their effects on the way the robot finds an object.

Key Results

Visual saliency, both bottom-up and top-down, was shown to significantly improve the visual search process in unknown environments. Saliency serves as a mechanism for identifying cues that can lead the robot to locations where the target is more likely to be detected. The efficiency of saliency mechanisms, however, depends strongly on the characteristics of the object (e.g. its similarity to the surrounding environment) and on the structure of the search environment.
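As a rough illustration of how saliency cues can steer a search toward more promising locations, the sketch below reweights a grid of target-location probabilities by a saliency map and picks the most likely cell to inspect next. This is a minimal sketch under stated assumptions, not the method used in the publications listed here: the grid representation, the multiplicative `1 + weight * saliency` update, and the names `apply_saliency` and `next_cell` are all hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

Grid = List[List[float]]


def normalize(grid: Grid) -> Grid:
    """Scale the grid so that all cells sum to 1."""
    total = sum(sum(row) for row in grid)
    if total <= 0:
        raise ValueError("grid must contain positive probability mass")
    return [[value / total for value in row] for row in grid]


def apply_saliency(prior: Grid, saliency: Grid, weight: float = 1.0) -> Grid:
    """Boost each cell of the prior by its saliency, then renormalize.

    Assumption: saliency values lie in [0, 1] and the boost is the
    illustrative factor (1 + weight * saliency).
    """
    boosted = [
        [p * (1.0 + weight * s) for p, s in zip(prior_row, saliency_row)]
        for prior_row, saliency_row in zip(prior, saliency)
    ]
    return normalize(boosted)


def next_cell(belief: Grid) -> Tuple[int, int]:
    """Return the (row, column) of the cell with the highest probability."""
    candidates = (
        (value, (r, c))
        for r, row in enumerate(belief)
        for c, value in enumerate(row)
    )
    return max(candidates, key=lambda item: item[0])[1]


# Uniform prior over a 2x2 environment; only the bottom-right cell is salient.
prior = [[0.25, 0.25], [0.25, 0.25]]
saliency = [[0.0, 0.0], [0.0, 1.0]]
belief = apply_saliency(prior, saliency)
target_cell = next_cell(belief)
```

In this toy setting the salient cell ends up with twice the probability of the others, so it is chosen first. A target that resembles its surroundings would produce a flatter saliency map and therefore a smaller gain, which is consistent with the dependence on object characteristics noted above.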

Publications

A. Rasouli, P. Lanillos, G. Cheng, and J. K. Tsotsos, “Attention-based active visual search for mobile robots,” The Journal of Autonomous Robots, 2019.

A. Rasouli and J. K. Tsotsos, “Integrating three mechanisms of visual attention for active visual search,” in 8th International Symposium on Attention in Cognitive Systems IROS, Hamburg, Germany, Sep. 2015.

A. Rasouli and J. K. Tsotsos, “Visual saliency improves autonomous visual search,” in Proc. Conference on Computer and Robot Vision (CRV), 2014, pp. 111–118.