Sang-Ah presents “Size of attentional suppressive surround” at VSS

Abstract:

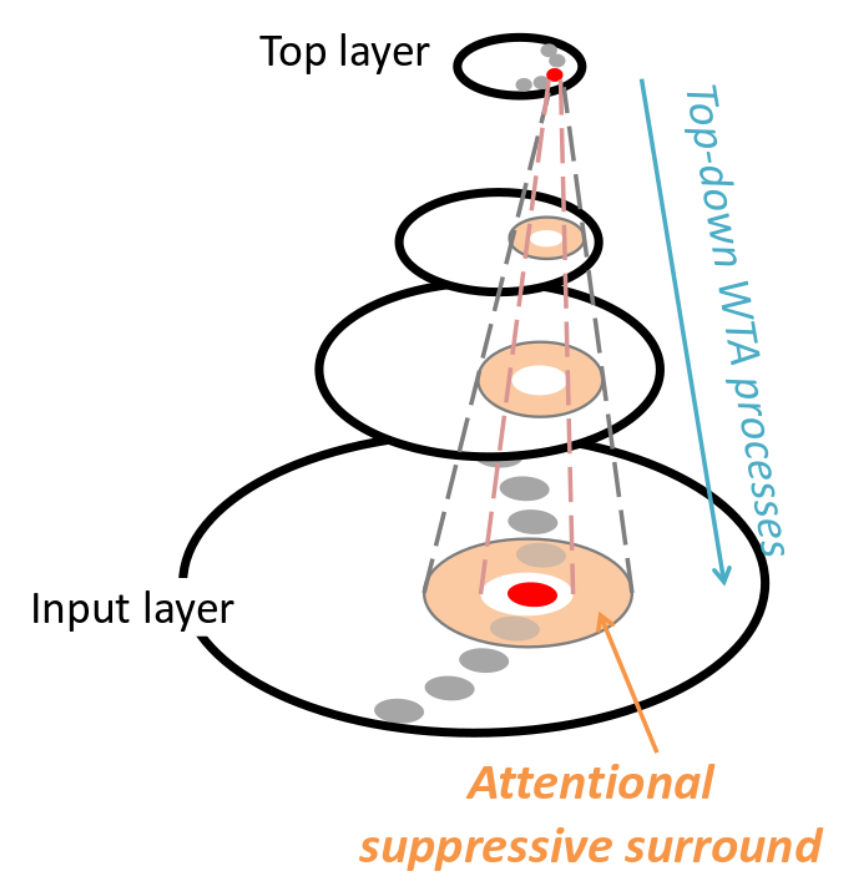

The Selective Tuning (ST) model (Tsotsos, 1995) proposed that the visual focus of attention is accompanied by a suppressive surround in spatial and feature dimensions. Follow-up studies have provided behavioural and neurophysiological evidence for this proposal (Carrasco, 2011; Tsotsos, 2011). ST also predicts that the size of the suppressive surround is determined by the level of processing within the visual hierarchy. We thus hypothesized that the size of the suppressive surround corresponds to the receptive field size of the neuron that best represents the attended stimulus. To test this hypothesis, we conducted a free-viewing visual search task using two types of features processed at different levels: orientation (early ventral) and Greebles (late ventral; Gauthier & Tarr, 1997). The sizes of the search displays and stimuli were scaled according to feature level to match the receptive field sizes of the targeted neurons (V2 and LO). We tracked participants’ eye movements during free-viewing visual search to identify rapid return saccades from a distractor to a target. During search, if attention falls on a distractor (D1) such that the target lies within its suppressive surround, the target is invisible until attention is released. An eye movement then reveals the target and triggers a return saccade (Sheinberg & Logothetis, 2001). In other words, shifting gaze from D1 to another distractor (D2) releases the target from suppression, and a short-latency (return) saccade to the target occurs. The distribution of distances between the target and D1 when return saccades occur provides a measure of the size of the suppressive surround. Searching for Greebles produced much larger suppressive surrounds than searching for orientation. This indicates that the size of the suppressive surround reflects the processing level of the attended stimulus, supporting ST’s original prediction.
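The return-saccade measure described in the abstract can be illustrated with a minimal Python sketch. This is not the analysis code used in the study. The `Fixation` record, the function name, and the 150 ms latency cutoff are all hypothetical, and the latency of the return saccade is approximated here by the fixation duration on D2. The sketch scans a fixation sequence for the pattern D1, D2, target and records the D1-to-target distance whenever the saccade to the target follows quickly.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Fixation:
    x: float            # horizontal gaze position (e.g. degrees of visual angle)
    y: float            # vertical gaze position
    duration_ms: float  # time spent here before the next saccade
    label: str          # "target" or a distractor identifier (hypothetical labels)


def return_saccade_distances(fixations, target_xy, max_latency_ms=150.0):
    """Collect D1-to-target distances for D1 -> D2 -> target sequences.

    max_latency_ms is an assumed cutoff for a "short-latency" saccade;
    the abstract does not state the criterion that was actually used.
    """
    tx, ty = target_xy
    distances = []
    # Slide a window of three consecutive fixations over the sequence.
    for d1, d2, nxt in zip(fixations, fixations[1:], fixations[2:]):
        is_pattern = (
            d1.label != "target"
            and d2.label != "target"
            and d1.label != d2.label
            and nxt.label == "target"
        )
        # A brief stay on D2 stands in for a short-latency return saccade.
        if is_pattern and d2.duration_ms <= max_latency_ms:
            distances.append(hypot(d1.x - tx, d1.y - ty))
    return distances
```

Pooling these distances across trials would give the kind of distribution the abstract refers to, under the assumptions stated above.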

The poster can be downloaded here.