Scene Classification in Indoor Environments for Robots using Word Embeddings

Abstract

Scene classification has been addressed with numerous techniques in the computer vision literature. However, as datasets in the field have grown in size, it has become difficult to achieve high accuracy in the context of robotics. To address this problem, we propose an approach to indoor scene classification that uses a CNN model trained on a reduced, pre-processed version of the Places365 dataset. We evaluate the approach empirically on a real-world dataset that we built by capturing image sequences with a GoPro camera. We also report results on a subset of the Places365 dataset and show a deployment of our approach on a robot operating in a real-world environment.

Dataset

Videos

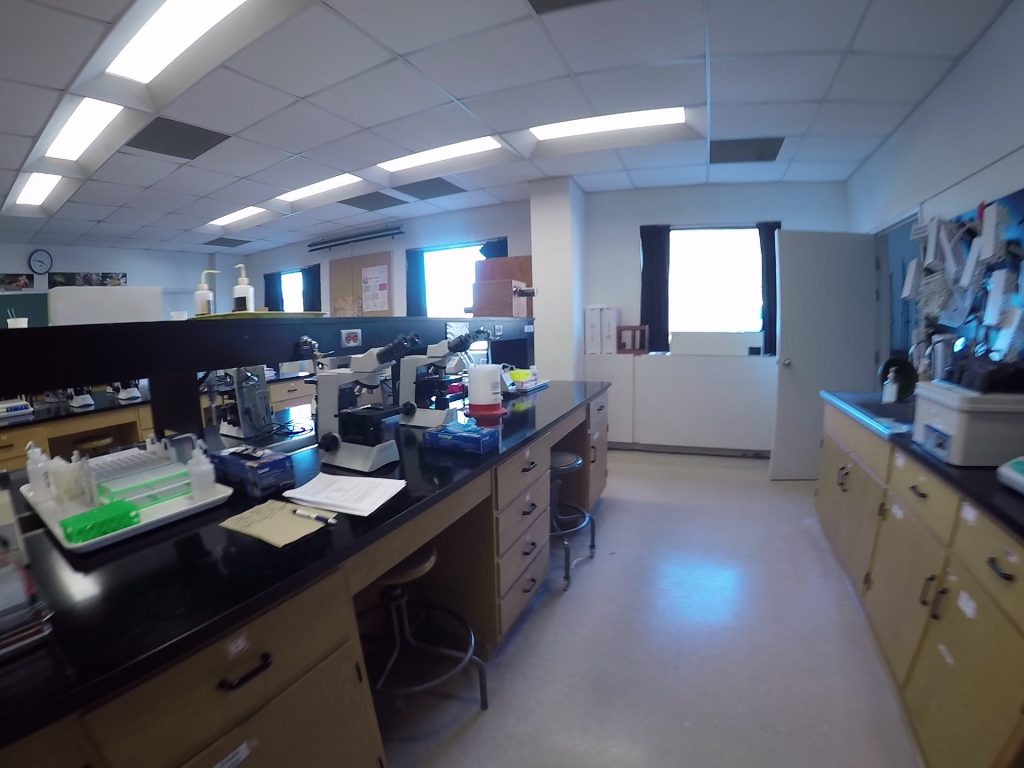

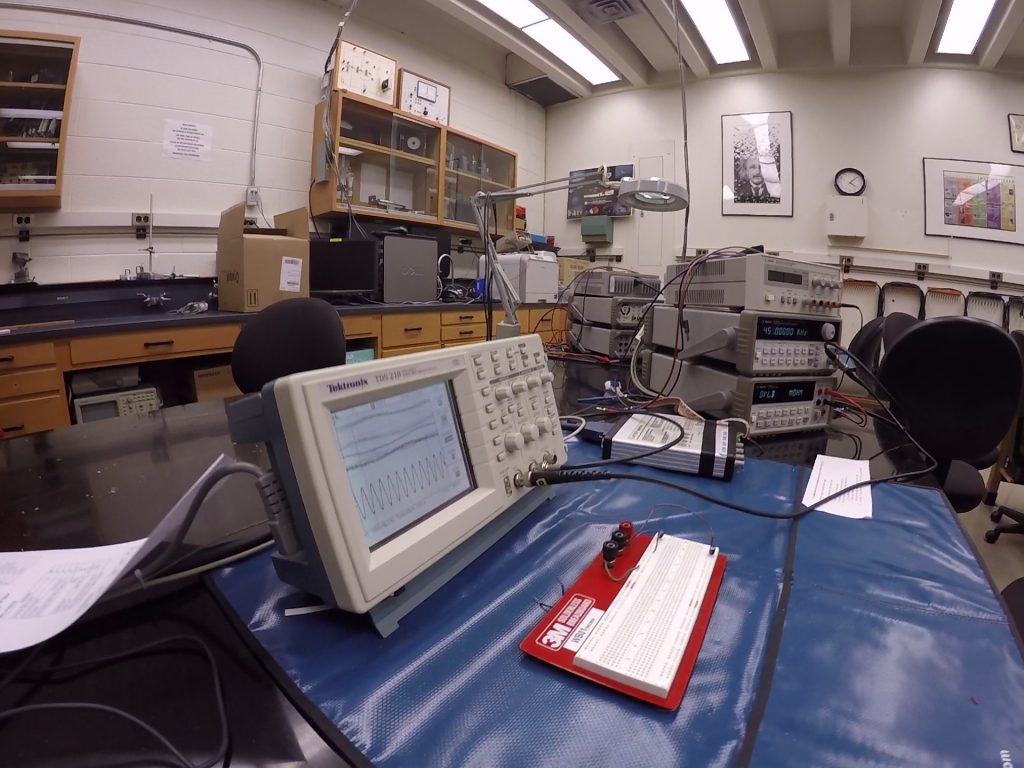

Sample Dataset Images